How to pronounce my name? Zhaoxuan -> jow sh-yen. Hi there, thanks for visiting my website!

I'm a Ph.D. student in Computer Science and Engineering at the University of Notre Dame, advised by Prof. Meng Jiang. I play with user data, including user-generated content and user behavior data, to personalize and enhance large language models (LLMs) and to detect suspicious user behavior. Please feel free to drop me an email for any form of communication or collaboration! ☘️

Email / CV / Google Scholar / Semantic Scholar / X (Twitter) / GitHub / LinkedIn

2025

Zhaoxuan Tan, Zheng Li, Tianyi Liu, Haodong Wang, Hyokun Yun, Ming Zeng, Pei Chen, Zhihan Zhang, Yifan Gao, Ruijie Wang, Priyanka Nigam, Bing Yin, Meng Jiang
Proceedings of ACL 2025. project page

We propose PUGC, a framework that leverages the implicit human preferences in unlabeled user-generated content (UGC) for scalable, domain-specific alignment of LLMs. PUGC achieves significant performance improvements over traditional methods on alignment benchmarks.

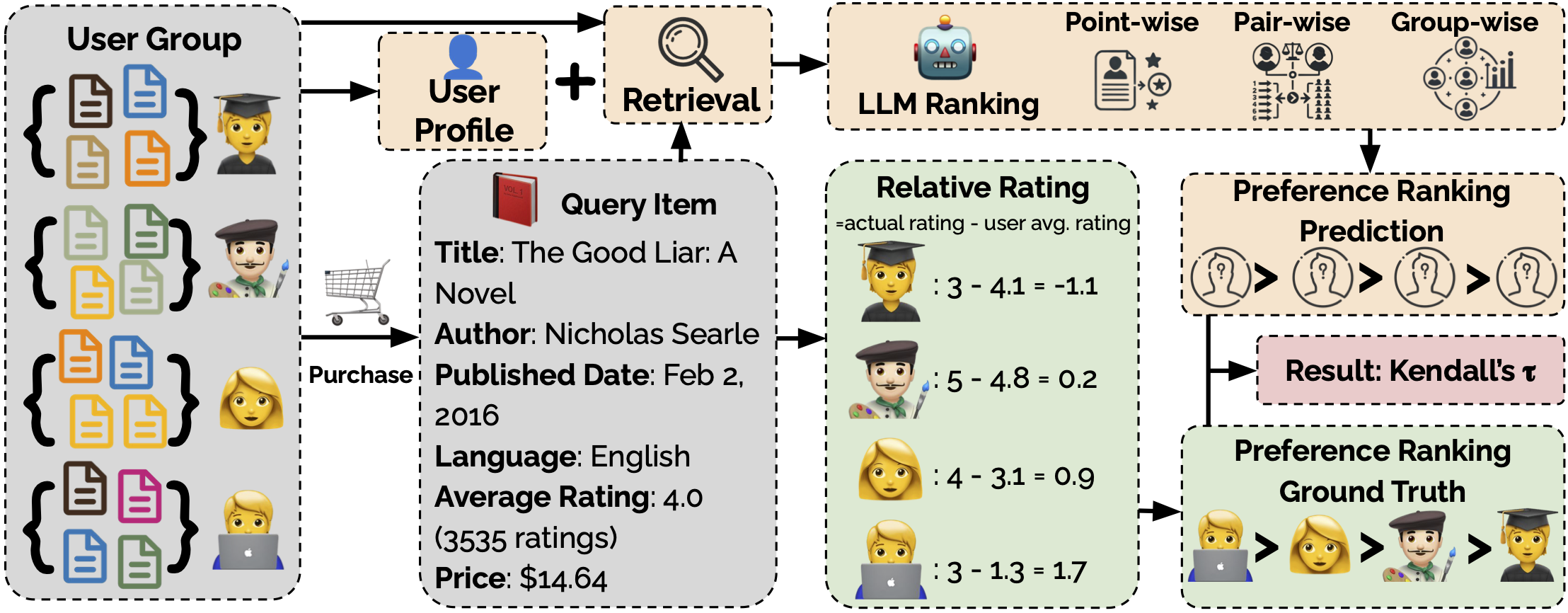

Zhaoxuan Tan, Zinan Zeng, Qingkai Zeng, Zhenyu Wu, Zheyuan Liu, Fengran Mo, Meng Jiang
arXiv preprint 2025.

We propose PerRecBench, a benchmark that isolates user rating bias and item quality in order to evaluate recommendation techniques in a grouped ranking manner. Our results reveal that while larger LLMs perform better overall, they struggle with personalized recommendation, underscoring the need for improved fine-tuning strategies and a better understanding of user preferences.

2024

Zhaoxuan Tan, Zheyuan Liu, Meng Jiang
Proceedings of EMNLP 2024.

We propose Personalized Pieces (Per-Pcs) for personalizing large language models: users can safely share and efficiently assemble personalized parameter-efficient fine-tuning (PEFT) modules through collaborative efforts. Per-Pcs outperforms non-personalized and PEFT retrieval baselines and performs comparably to OPPU with significantly lower resource use. It promotes safe sharing and makes LLM personalization more efficient, effective, and widely accessible.

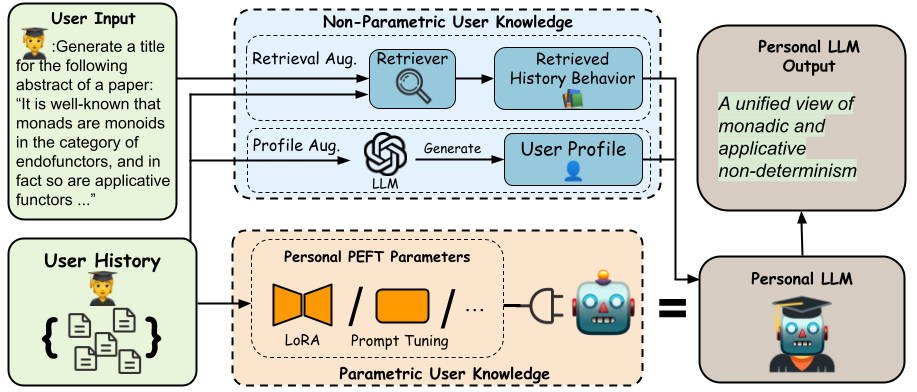

Zhaoxuan Tan, Qingkai Zeng, Yijun Tian, Zheyuan Liu, Bing Yin, Meng Jiang
Proceedings of EMNLP 2024.

We propose One PEFT Per User (OPPU) for personalizing large language models, where each user is equipped with a personal PEFT module that can be plugged into the base LLM to obtain their personal LLM. Compared to existing prompt-based LLM personalization methods, OPPU offers model ownership and better generalization in capturing user behavior patterns.

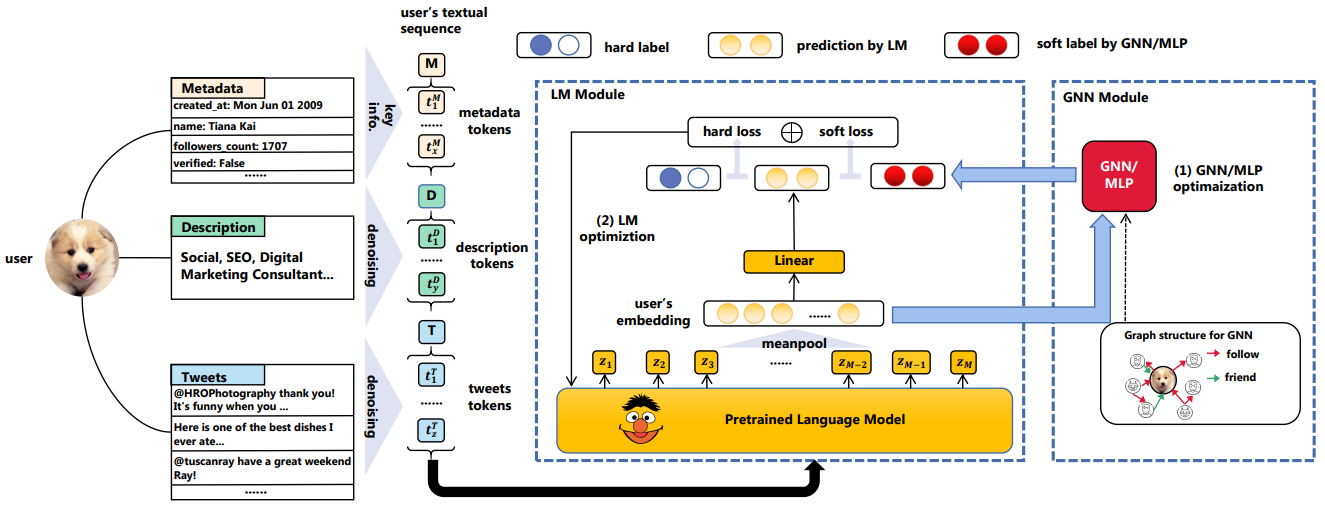

Zijian Cai, Zhaoxuan Tan, Zhenyu Lei, Zifeng Zhu, Hongrui Wang, Qinghua Zheng, Minnan Luo
Proceedings of WSDM 2024.

We propose LMBot, which uses graph-aware knowledge distillation to train a language model that acts as a proxy for graph-less Twitter bot detection at inference time. This approach addresses the issues of graph data dependency and sampling bias.

2023

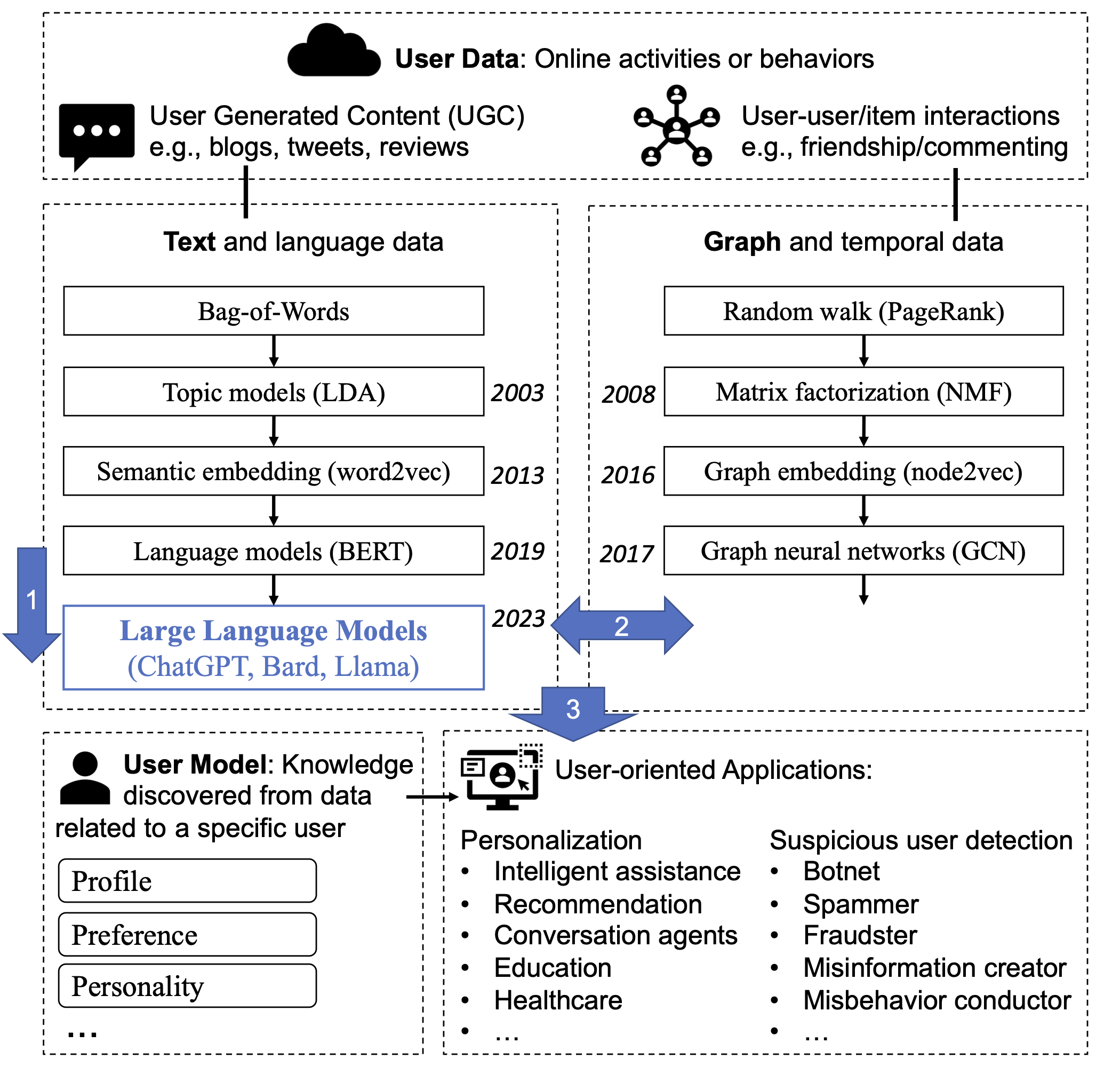

Zhaoxuan Tan, Meng Jiang
IEEE Data Engineering Bulletin (DEBULL), 2023. reading list

We summarize existing research on how and why LLMs are great tools for modeling and understanding UGC. We then review several categories of large language models for user modeling (LLM-UM) approaches that integrate LLMs with text- and graph-based methods in different ways. Next, we introduce specific LLM-UM techniques for a variety of user modeling (UM) applications. Finally, we present the remaining challenges and future directions in LLM-UM research.

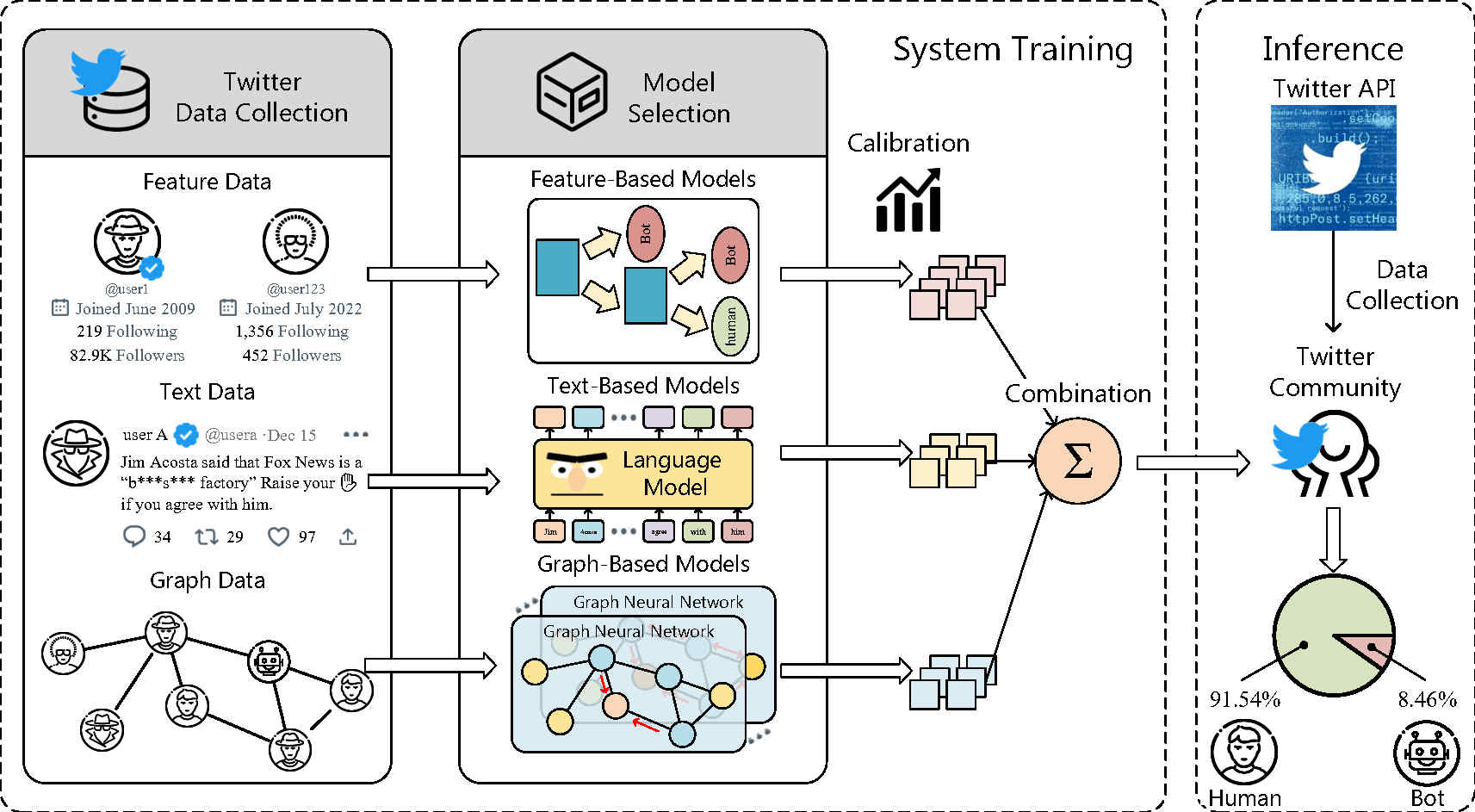

Zhaoxuan Tan*, Shangbin Feng*, Melanie Sclar, Herun Wan, Minnan Luo, Yejin Choi, Yulia Tsvetkov
Proceedings of EMNLP-Findings, 2023. demo / tweet

We introduce the concept of community-level Twitter bot detection and develop BotPercent, a multi-dataset, multi-model Twitter bot detection pipeline. Using BotPercent, we investigate the presence of bots in various Twitter communities and find that the bot distribution is heterogeneous in both space and time.

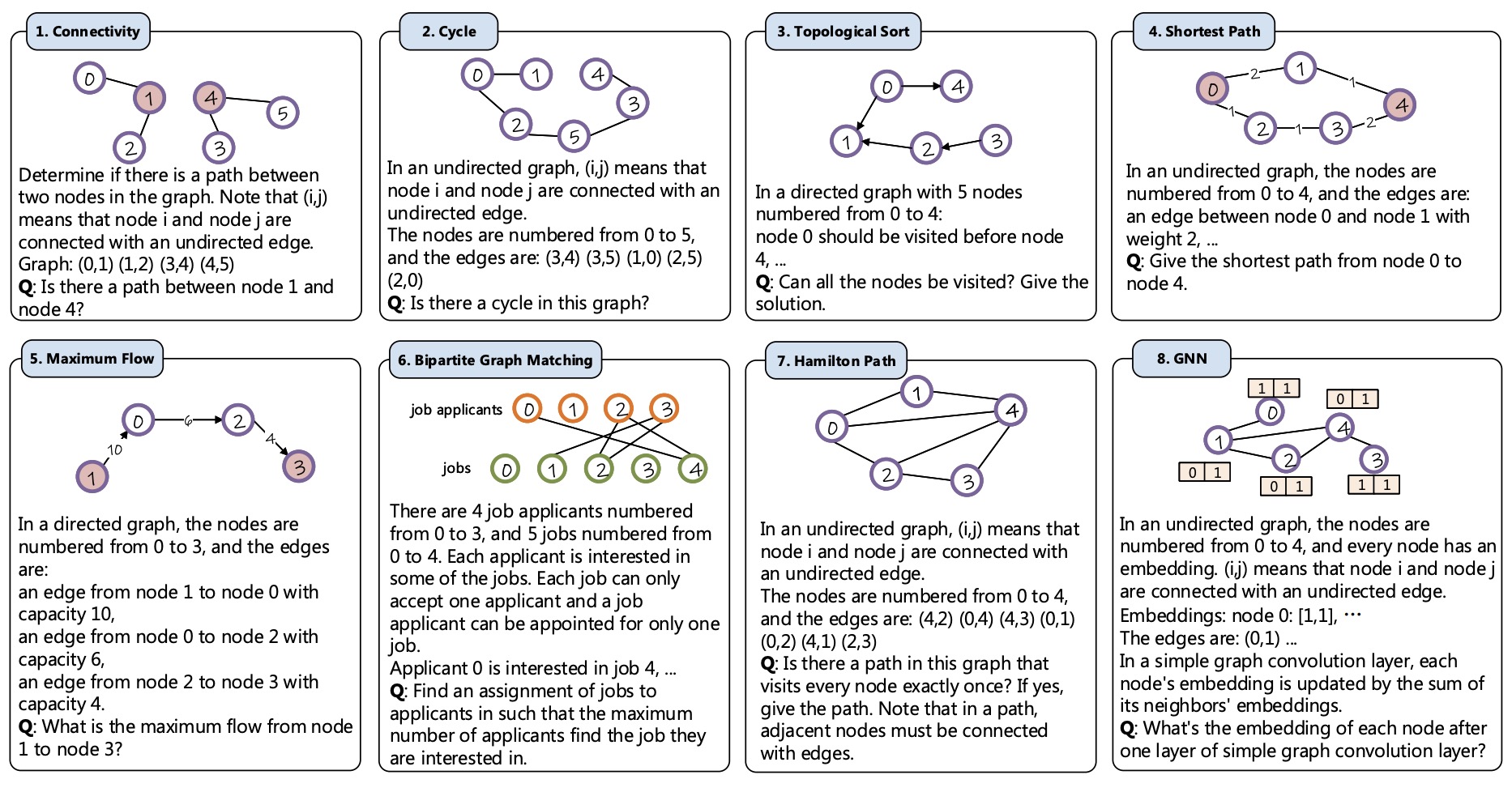

Heng Wang*, Shangbin Feng*, Tianxing He, Zhaoxuan Tan, Xiaochuang Han, Yulia Tsvetkov
Proceedings of NeurIPS 2023 (Spotlight). code

Are language models graph reasoners? We propose the NLGraph benchmark, a test bed of graph-based reasoning problems posed to language models in natural language. We find that LLMs have preliminary graph reasoning abilities, while the most advanced graph reasoning tasks remain an open research question.

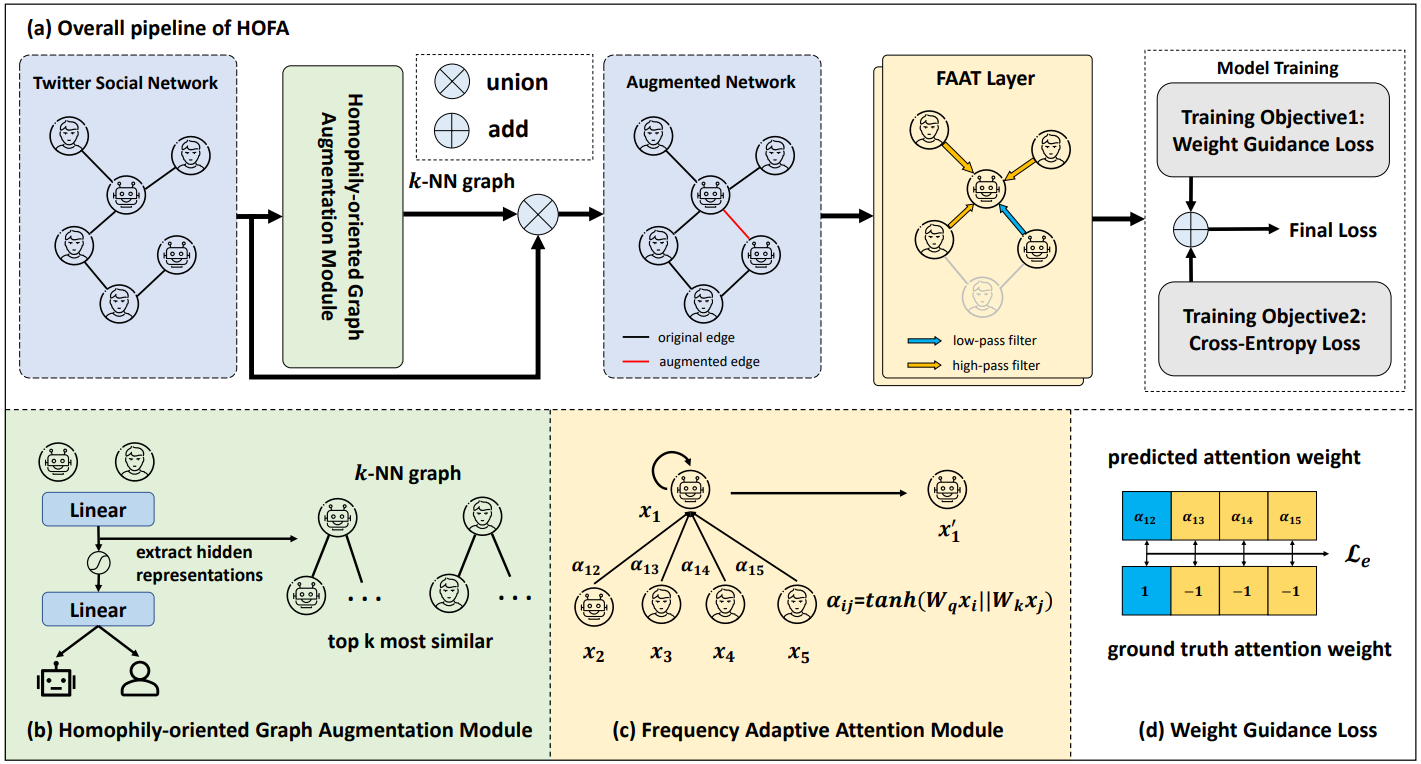

Sen Ye, Zhaoxuan Tan, Zhenyu Lei, Ruijie He, Hongrui Wang, Qinghua Zheng, Minnan Luo
arXiv preprint 2023.

We identify the heterophilous disguise challenge in Twitter bot detection and propose HOFA, a framework equipped with Homophily-Oriented Augmentation and Frequency Adaptive Attention to address this challenge.

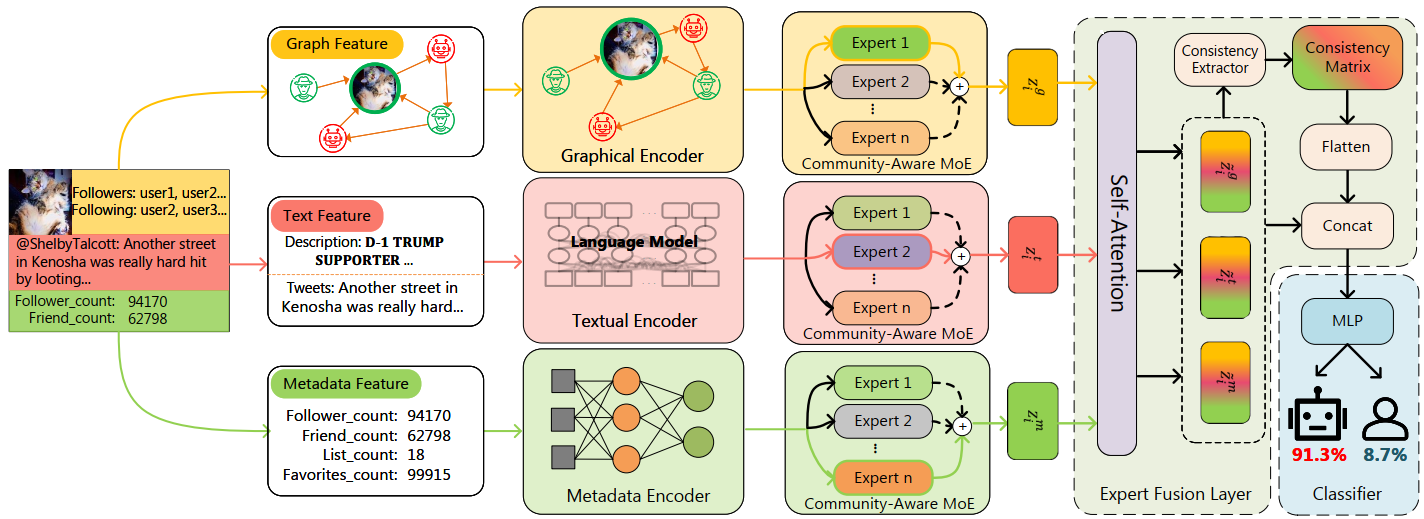

Yuhan Liu, Zhaoxuan Tan, Heng Wang, Shangbin Feng, Qinghua Zheng, Minnan Luo
Proceedings of SIGIR 2023.

We propose a community-aware mixture-of-experts approach to address two challenges in detecting advanced Twitter bots: manipulated features and diverse communities.

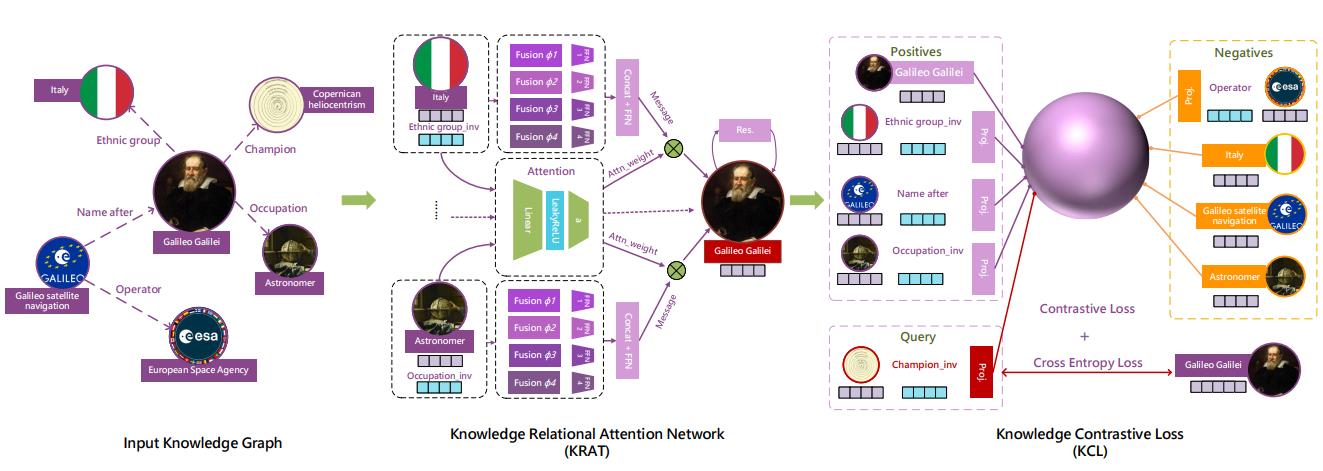

Zhaoxuan Tan, Zilong Chen, Shangbin Feng, Qingyue Zhang, Qinghua Zheng, Jundong Li, Minnan Luo
Proceedings of The Web Conference (WWW), 2023. code / talk

We adopt contrastive learning and a knowledge relational attention network to alleviate the widespread sparsity problem in knowledge graphs.

2022

Shangbin Feng*, Zhaoxuan Tan*, Herun Wan*, Ningnan Wang*, Zilong Chen*, Binchi Zhang*, Qinghua Zheng, Wenqian Zhang, Zhenyu Lei, Shujie Yang, Xinshun Feng, Qingyue Zhang, Hongrui Wang, Yuhan Liu, Yuyang Bai, Heng Wang, Zijian Cai, Yanbo Wang, Lijing Zheng, Zihan Ma, Jundong Li, Minnan Luo
Proceedings of NeurIPS, Datasets and Benchmarks Track, 2022. website / GitHub / bibtex / poster

We present TwiBot-22, the largest graph-based Twitter bot detection benchmark to date, which provides diversified entities and relations in the Twittersphere and has considerably better annotation quality than existing datasets.

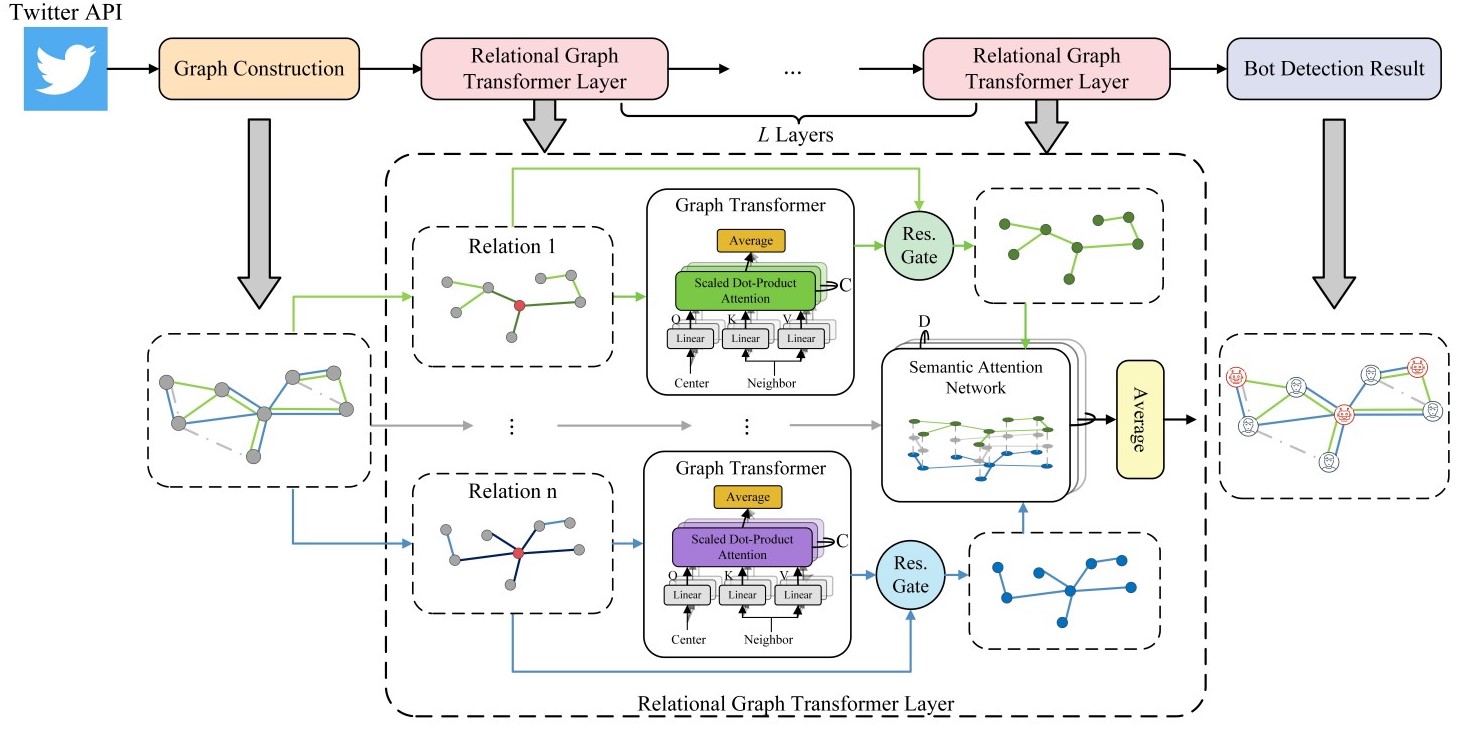

Shangbin Feng, Zhaoxuan Tan, Rui Li, Minnan Luo
Proceedings of AAAI 2022. slides / code / bibtex

We propose relational graph transformers, a graph neural network (GNN) architecture that leverages the intrinsic relation heterogeneity and influence heterogeneity in the Twitter network.

Google

2025.10 - 2025.12: Student Researcher @ Core ML (Remote). Host: Dr. Ao Liu, Dr. Yan Zhu

Amazon Science

2025.06 - 2025.10: Applied Scientist Intern @ Rufus (Palo Alto, CA). Host: Dr. Zixuan Zhang, Dr. Zheng Li

Microsoft

2025.03 - 2025.06: Research Intern @ Office of Applied Research (Remote). Host: Dr. Pei Zhou, Dr. Mengting Wan

Amazon Science

2024.05 - 2024.10: Applied Scientist Intern @ Rufus (Palo Alto, CA). Host: Dr. Zheng Li, Dr. Tianyi Liu

University of Notre Dame

2023.08 - present: Ph.D. in Computer Science and Engineering. Advisor: Prof. Meng Jiang

Xi'an Jiaotong University

2019.08 - 2023.07: B.E. in Computer Science and Technology. GPA: 89.1 (+3) / 100.0 [top 5%]. Advisor: Prof. Minnan Luo

Template courtesy: Jon Barron.